Backlink research does not have to start with expensive SEO platforms or complex APIs. Many small businesses and agencies can uncover real link opportunities just by scraping a handful of competitor pages and top‑ranking articles. A simple page scraper turns any URL into readable text and link data you can use for smarter outreach.

At Backlink Phoenix, this kind of lightweight scraping is part of the first week of almost every campaign. Instead of guessing where to build links, we study what already works in your niche: who gets linked, what content earns those links, and which sites keep appearing across multiple pages. That insight is enough to build a focused, realistic backlink plan—without a big‑tool budget.

Why scraping beats manual backlink hunting

Manual backlink research usually means clicking through dozens of articles, scrolling forever, and copy‑pasting URLs into spreadsheets. It is slow, easy to abandon, and you miss patterns that only show up when you look at pages side by side. A simple scraper lets you grab headings, text, and outbound links from a page in seconds, so you can analyze 10–20 URLs in one focused session instead of spreading the work over several weeks.

This matters especially for new or smaller sites. You do not need to track the entire internet; you just need to see what the top players in your niche are doing. Scraping a short list of competitor blog posts, resource pages, and guides is often enough to reveal where their links come from and what kind of content attracts them.

Step 1: Scrape competitor articles for outbound link patterns

Start with 5–10 pages that already rank for the keywords you care about most. These might be "how‑to" guides, in‑depth resources, or comparison posts. Run each URL through a basic page scraper and extract the external links. When you combine the results, the same domains tend to show up again and again; those sites are evidently treated as trusted references in your space.

Those repeating domains become your first outreach list. They already link to content similar to yours, they understand your topic, and they have a history of linking out. Instead of cold emailing random websites, you focus on people who have proven they give backlinks.
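If you are comfortable with a little code, the "combine the results and look for repeats" step can be sketched in Python 3.9+ with only the standard library. This is a minimal sketch under stated assumptions, not how any particular tool works: it assumes you have already saved each page's HTML, and the names `external_domains` and `repeating_domains` and the `min_pages=2` threshold are illustrative choices. Each domain is counted once per page, so the number next to a domain is how many of your scraped pages link to it.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect absolute href values from every <a> tag on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links such as "/about" against the page URL.
                self.links.append(urljoin(self.base_url, href))


def _domain(url):
    """Lowercased host name without a leading "www." (None if missing)."""
    host = urlparse(url).hostname
    return host.removeprefix("www.") if host else None


def external_domains(page_url, html):
    """Set of external http(s) domains linked from one page."""
    parser = LinkCollector(page_url)
    parser.feed(html)
    parser.close()
    own = _domain(page_url)
    return {
        _domain(link)
        for link in parser.links
        if urlparse(link).scheme in ("http", "https")
        and _domain(link) not in (None, own)
    }


def repeating_domains(pages, min_pages=2):
    """Rank domains by how many pages link to them.

    pages maps page URL -> saved HTML; each domain counts at most once per page.
    """
    counts = Counter()
    for url, html in pages.items():
        counts.update(external_domains(url, html))
    # Most-linked first; ties broken alphabetically so the output is stable.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(domain, n) for domain, n in ranked if n >= min_pages]
```

Domains near the top of the result are natural first entries for the outreach list; `mailto:` links and links back to the scraped site itself are ignored.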

Step 2: Use scrapers to uncover resource pages and "tools we recommend" lists

Many high‑authority sites maintain resource pages, "favorite tools" lists, or curated link roundups. These pages are pure backlink gold because they exist to point visitors to useful external resources. With a scraper, you can paste in the URL of any "Resources", "Recommended tools", or "Links" page and instantly gather every outbound link along with the context around it, such as its anchor text and the short description next to it.
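Capturing "links plus context" can be sketched in a similar way. This hypothetical parser assumes that on most resource pages each recommendation sits in its own list item or paragraph, so it records the text of the enclosing `<li>`, `<p>`, `<td>`, or `<dd>` element as the context for every link inside it. `ResourceLinkParser` and `resource_links` are illustrative names, and pages with other layouts would need different rules.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Assumption: each recommendation lives in its own block element.
BLOCK_TAGS = {"li", "p", "td", "dd"}


def _clean(parts):
    """Join text fragments and collapse runs of whitespace."""
    return " ".join("".join(parts).split())


class ResourceLinkParser(HTMLParser):
    """Record each outbound link with its anchor text and block context."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.results = []       # finished {"url", "anchor", "context"} dicts
        self._block_text = []   # text seen since the current block started
        self._block_links = []  # links found inside the current block
        self._href = None       # href of the <a> we are inside, if any
        self._anchor = []

    def handle_starttag(self, tag, attrs):
        if tag in BLOCK_TAGS:
            self._flush_block()
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = urljoin(self.base_url, href)
                self._anchor = []

    def handle_endtag(self, tag):
        if tag == "a" and self._href:
            self._block_links.append({"url": self._href, "anchor": _clean(self._anchor)})
            self._href = None
        elif tag in BLOCK_TAGS:
            self._flush_block()

    def handle_data(self, data):
        self._block_text.append(data)
        if self._href:
            self._anchor.append(data)

    def _flush_block(self):
        # Attach the finished block's text to every link found inside it.
        context = _clean(self._block_text)
        for link in self._block_links:
            link["context"] = context
            self.results.append(link)
        self._block_text = []
        self._block_links = []

    def close(self):
        super().close()
        self._flush_block()


def resource_links(page_url, html):
    """List of {"url", "anchor", "context"} dicts for one resource page."""
    parser = ResourceLinkParser(page_url)
    parser.feed(html)
    parser.close()
    return parser.results
```

Reading the `context` column side by side is often the quickest way to see whether a page favors local businesses, tools, guides, or data sources.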

Once you have those lists, it becomes obvious what kind of sites they like to feature. Some focus on local businesses, some on SaaS tools, others on educational guides or statistics. That helps you decide how Backlink Phoenix—or your client's site—should be positioned when you reach out: as a service provider, a tool, a guide, or a data source.

Step 3: Scrape top‑ranking content for topic and anchor ideas

Good backlinks usually point to content that answers a specific question in depth. Scrape a few top‑ranking articles for your main topics and focus on their headings and in‑content links. The headings show you the subtopics that search engines already reward; the anchor text shows how writers naturally describe related tools, brands, and concepts.

You can use this information to design your own content and outreach:

Build articles that cover the same core questions, but with clearer structure or better local examples.

Suggest natural anchor text in your guest post pitches that fits how the niche already talks about your topic.

Instead of over‑optimizing with awkward anchors, you mirror the language that is already working.
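Extracting headings and in-content anchor text is a small variation on the same idea. The sketch below is an illustration under assumptions: it only looks at `<h1>` to `<h3>` headings, and `parse_outline` and `common_anchors` are hypothetical names rather than part of any tool. `common_anchors` lowercases anchor text and keeps phrases that appear on at least two pages, which is one simple way to spot how the niche already describes a topic.

```python
from collections import Counter
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3"}  # assumption: deeper levels are mostly noise


class OutlineParser(HTMLParser):
    """Collect heading text and anchor text, including links inside headings."""

    def __init__(self):
        super().__init__()
        self.headings = []   # (tag, text) pairs in page order
        self.anchors = []    # visible text of each <a>
        self._heading = None
        self._heading_buf = []
        self._in_anchor = False
        self._anchor_buf = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._heading, self._heading_buf = tag, []
        elif tag == "a":
            self._in_anchor, self._anchor_buf = True, []

    def handle_endtag(self, tag):
        if tag == self._heading:
            text = " ".join("".join(self._heading_buf).split())
            if text:
                self.headings.append((tag, text))
            self._heading = None
        elif tag == "a" and self._in_anchor:
            text = " ".join("".join(self._anchor_buf).split())
            if text:
                self.anchors.append(text)
            self._in_anchor = False

    def handle_data(self, data):
        # Text of a link inside a heading feeds both buffers.
        if self._heading:
            self._heading_buf.append(data)
        if self._in_anchor:
            self._anchor_buf.append(data)


def parse_outline(html):
    """Return (headings, anchors) for one HTML page."""
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return parser.headings, parser.anchors


def common_anchors(pages_html, min_pages=2):
    """Lowercased anchor phrases used on at least min_pages pages."""
    counts = Counter()
    for html in pages_html:
        _, anchors = parse_outline(html)
        counts.update({a.lower() for a in anchors})  # once per page
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(phrase, n) for phrase, n in ranked if n >= min_pages]
```

Exact-match counting is deliberately crude: "a free SEO audit tool" and "free SEO audit tool" count as different phrases, so skim the raw anchor lists as well.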

The kind of scraper that's "good enough"

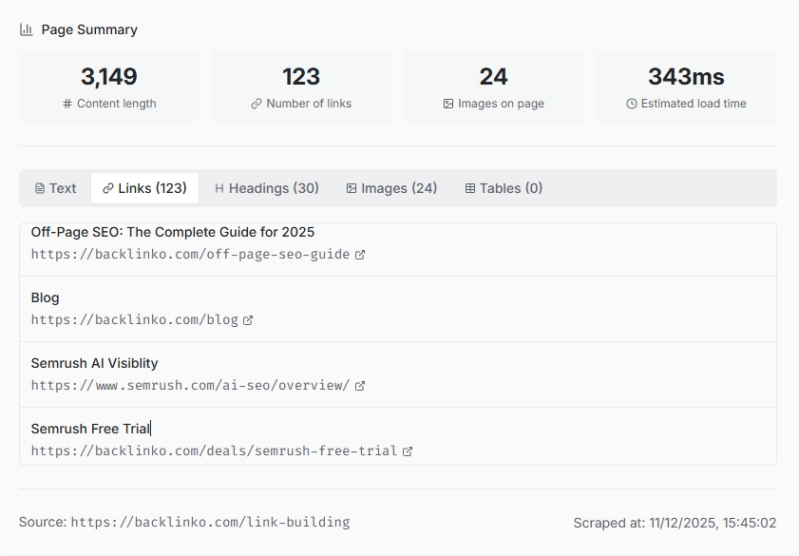

You do not need a heavy, enterprise scraping stack to do this kind of research. A basic page scraper that accepts a URL and returns clean text and links covers most of what small and medium businesses need. The key is speed and simplicity: paste, export, analyze, move on.
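To show how little a "good enough" scraper needs, here is a minimal Python sketch of the paste, export, analyze loop. It is an illustration under assumptions, not the implementation of any particular product: it fetches raw HTML with `urllib.request` (so it does no JavaScript rendering), skips `<script>` and `<style>` content, and the User-Agent string and function names are hypothetical.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import Request, urlopen

SKIP_TAGS = {"script", "style", "noscript"}  # content readers never see


class TextAndLinks(HTMLParser):
    """Collect visible text fragments and absolute link URLs."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.text_parts = []
        self.links = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())


def extract(html, base_url):
    """Return (clean_text, links) for an HTML string."""
    parser = TextAndLinks(base_url)
    parser.feed(html)
    parser.close()
    return " ".join(parser.text_parts), parser.links


def scrape(url, timeout=10):
    """Fetch one page (raw HTML, no JavaScript) and extract text + links."""
    request = Request(url, headers={"User-Agent": "simple-link-research/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return extract(html, url)


def links_to_csv(rows):
    """Turn (page_url, link) pairs into CSV text for a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["page_url", "link"])
    writer.writerows(rows)
    return buffer.getvalue()
```

In use, `text, links = scrape("https://example.com/guide")` returns the page text and absolute link URLs, and `links_to_csv([(url, link) for link in links])` gives CSV text you can paste into a spreadsheet. Keeping fetching (`scrape`) separate from parsing (`extract`) also lets you parse pages you saved by hand.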

Quick tool tip: The free Web Page Scraper on our sister site Crazedo (crazedo.com) does exactly this. Paste any URL and get an instant export of the page's text and links. It is a good fit for small teams just starting out with link building.

When to move from simple scrapers to larger tools

There is a point where a basic page scraper is no longer enough—for example, when you:

Monitor hundreds of domains and thousands of pages on a schedule.

Need to scrape JavaScript‑heavy sites or protected dashboards at scale.

Want to automate reporting, alerts, and integration with BI tools.

At that stage, adding API‑based scraping or full SEO suites makes sense. Until then, simple scraping plus focused outreach can already deliver clean, relevant backlinks that move rankings, especially in less competitive niches or local markets.

How Backlink Phoenix uses scrapers inside real campaigns

For Backlink Phoenix clients, scrapers are a research engine, not the main product. First, we scrape competitor content and related resources to see how your industry links, talks, and recommends. Then we group link opportunities into categories such as resource links, guest posts, tools lists, and expert quotes. Finally, we run targeted outreach built on those insights instead of guessing. Because this process is grounded in actual pages and links from your niche, the link opportunities we pursue are realistic and aligned with what search engines already reward. It is a lean way to plan campaigns that still respects quality and long‑term safety.
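The grouping step can be approximated with simple keyword rules on each opportunity's URL path and page title. This is a hypothetical sketch, not Backlink Phoenix's actual process: the keyword lists in `CATEGORY_RULES` are illustrative guesses, the first matching rule wins, and anything unmatched is labeled "uncategorized" for manual review.

```python
from urllib.parse import urlparse

# Hypothetical keyword rules; tune them for each niche. First match wins.
CATEGORY_RULES = [
    ("guest post", ("write-for-us", "write for us", "guest-post", "contribute")),
    ("tools list", ("tools", "software", "apps")),
    ("expert quote", ("expert", "roundup", "interview", "quotes")),
    ("resource link", ("resources", "useful-links", "links")),
]


def categorize(url, title=""):
    """Guess an outreach category from the URL path and page title."""
    haystack = (urlparse(url).path + " " + title).lower()
    for category, keywords in CATEGORY_RULES:
        if any(keyword in haystack for keyword in keywords):
            return category
    return "uncategorized"  # leave for manual review


def group_opportunities(opportunities):
    """Group (url, title) pairs into {category: [urls]}."""
    groups = {}
    for url, title in opportunities:
        groups.setdefault(categorize(url, title), []).append(url)
    return groups
```

Substring rules like these misfire easily (a path containing "tools" is not always a tools list), so treat the output as a first pass to review rather than a final classification.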

FAQ

Q: Do I really need scraping tools for backlinks?

A: No, but they make research much faster. Manual research works, but scraping lets you analyze 20 competitor pages in about 20 minutes instead of several hours.

Q: Is the Crazedo scraper free?

A: Yes, completely free. Paste any URL and get clean text, headings, and links instantly—no signup required.

Q: Will Google penalize sites using scraped data?

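A: Not for research. Google's spam policies target sites that republish scraped content, not sites that study competitors' pages. We only use scraping to understand competitors, never to copy their content.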

Q: How many pages should I scrape to start?

A: 5–10 is a good start. Focus on top-ranking articles and resource pages from authority sites in your niche.
